
The virtual reality storm keeps gathering strength, sweeping up everything from headsets and glasses of all shapes, to interactive devices of every kind, to content creation and the building of experience halls. More and more VR practitioners and entrepreneurs treat pioneers such as The Void and Zero Latency as the targets to catch up with, and have announced plans to develop VR theme parks or VR themed experience solutions.

However, viewed as a whole, the industry is not developing as smoothly as it might seem. The quality of VR headsets and glasses is uneven, and mainstream products (such as Oculus VR and HTC Vive) have not yet fully reached the consumer market. VR places very high demands on system configuration, the equipment is awkward to wear, and mobile devices mostly cannot handle the rendering. Much also remains unclear about how to create content and design interaction for an experience hall. On top of this, the most important missing piece in building a VR experience hall is a cheap, flexible and accurate positioning solution.

Positioning here means determining the absolute spatial position of each participant within the venue and feeding it back to every player in the game and to the game server, so that the game logic a group game requires can be executed.

For example, when a player approaches the edge of the woods, a hungry wolf that has long been lying in wait rushes out; or several players stage a virtual reality CS match, shooting and coordinating tactics. If the players' positions within the venue cannot be identified effectively, much of the fun and complexity is lost, and what remains may be no more than a first-person, fixed-position shooting game. Positioning accuracy and speed cannot be ignored either. An error of 20 cm may decide whether a bullet hits the virtual enemy's chest, and the latency introduced by positioning itself strongly affects the player's sense of presence and can even contribute to 3D motion sickness.

However, among the VR spatial positioning schemes currently on the market, none offers a sufficiently mature and stable implementation; they manage only unconvincing demos, and even those come at high cost and with little flexibility. At this level they hardly count as solutions, and they fall far short of what experience hall operators need. So what criteria should a good positioning scheme aim for? Has the savior the industry has been missing arrived? This article tries to answer these questions.

(a) Somatosensory camera

At the end of last year, a one-day VR experience took place in the bustling Shinjuku district in Japan. Participants wearing Gear VR saw a virtual snowy mountain scene, while a physical suspension bridge prop was built and fans and air conditioning simulated the cold mountain environment. Each participant trudged forward across the suspension bridge and collected a prize at the far end of the venue: a hot drink from the vendor.

(Via v7.pstatp.com)

This experience is not complex, but it is very effective. One indispensable element is determining where the player is on the suspension bridge, so that the scene rendered in Gear VR can be updated accordingly. The promotional video itself offers a clue:

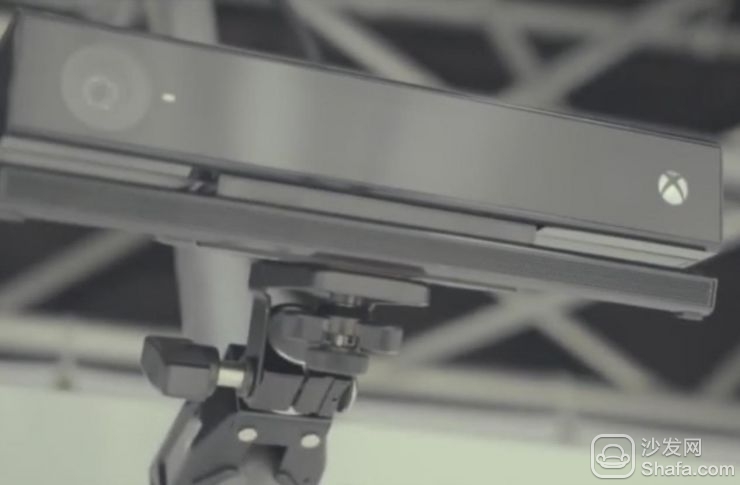

(Via v7.pstatp.com)

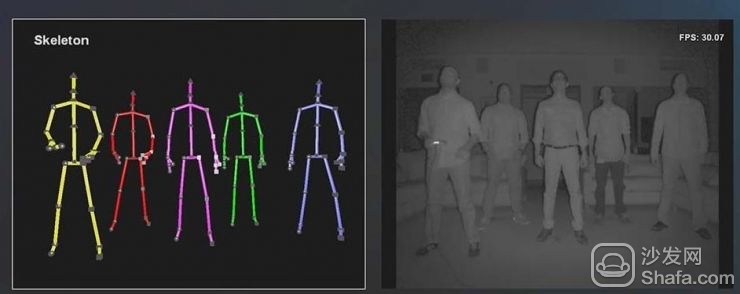

This is clearly a Microsoft Kinect2 somatosensory device. It uses the TOF (Time of Flight) method: the sensor measures the phase difference of the light it emits after that light is reflected by an object, and from this derives the distance between the device and the actual scene, that is, the depth. The resulting depth image can be used not only to determine the position of an object relative to the device, but also to obtain the object's point cloud data and even a person's skeleton information. Most importantly, the Kinect2 is just an optional peripheral for the Xbox One console, so buying one and using it for simple spatial positioning of VR content will never cost much.
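The phase-based TOF relation mentioned above can be sketched in a few lines. This is a minimal illustration that assumes a continuous-wave sensor with a single modulation frequency; the function names and the 80 MHz figure used in the note below are illustrative assumptions, not Kinect2 specifications.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def phase_to_depth(phase_shift_rad, modulation_hz):
    """Depth in metres implied by the phase shift of the reflected light.

    The light travels to the object and back, so the round trip covers
    2 * depth, which gives depth = c * phase / (4 * pi * f).
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz):
    """Largest depth that can be measured before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)
```

With an assumed 80 MHz modulation, `unambiguous_range(80e6)` is a little under 2 m, which hints at why real sensors typically combine several modulation frequencies to cover a room-sized range.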

(Via edgefxkits.com)

However, the flip side of the low price is weak performance. At a refresh rate of 30 fps, the delay in the positioning results is clearly noticeable (although it matters less than headset latency). Kinect's field of view is only about 60 degrees, and its maximum recognition range is generally 3-4 meters. Within this area it can track the positions of up to 6 people, who must not overlap too much in Kinect's field of view or some will be missed (as shown in the above figure). Clearly, these harsh restrictions make it hard to imagine a game more complicated than climbing a snowy mountain, but it is at least a good start.

(b) Optical positioning and image recognition

One piece of news from last year should be familiar to most VR practitioners: Zero Latency from Australia became the world's first virtual reality gaming experience center. It occupies about 400 square meters, uses 129 PS Eye cameras, and supports 6 players in a game together.

Indeed, the biggest selling point of this experience center compared with earlier VR applications is that players can walk freely in the virtual space. The PS Move kit (the PS Eye camera plus the Move controller with its glowing marker ball) is the core of this technology.

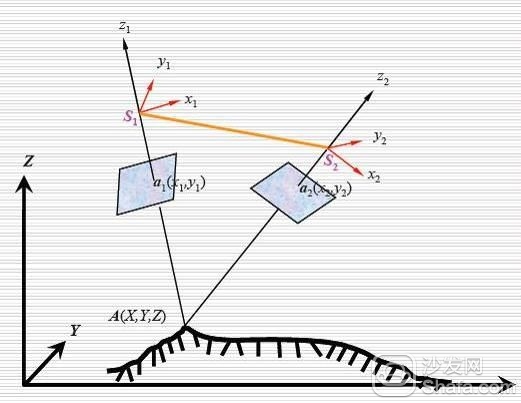

As the game image above shows, light balls of different colors stand out clearly from the background in the PS Eye camera image, which lets computer vision (CV) algorithms extract them. Of course, a single PS Eye camera cannot recover a player's three-dimensional position. More than one camera must capture the position of the player's light ball in its own image, and a triangulation algorithm then yields the player's real position in the world coordinate system.
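A minimal sketch of two-camera triangulation follows. It assumes each camera has already converted the detected light ball into a viewing ray in world coordinates (a ray origin at the camera center plus a direction); that conversion depends on camera calibration and is not shown. The midpoint method used here is one simple option, not necessarily what any of the systems described in this article use, and the function name is hypothetical.

```python
def triangulate_midpoint(origin_a, dir_a, origin_b, dir_b):
    """Estimate a 3D point from two viewing rays.

    Each ray is origin + s * direction. Because of noise the rays rarely
    intersect exactly, so the midpoint of the shortest segment joining
    them is returned.
    """
    def dot(u, v):
        return sum(x * y for x, y in zip(u, v))

    w = [p - q for p, q in zip(origin_a, origin_b)]
    a, b, c = dot(dir_a, dir_a), dot(dir_a, dir_b), dot(dir_b, dir_b)
    d, e = dot(dir_a, w), dot(dir_b, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")

    s = (b * e - c * d) / denom  # parameter of the closest point on ray A
    t = (a * e - b * d) / denom  # parameter of the closest point on ray B
    point_a = [o + s * k for o, k in zip(origin_a, dir_a)]
    point_b = [o + t * k for o, k in zip(origin_b, dir_b)]
    return [(p + q) / 2.0 for p, q in zip(point_a, point_b)]
```

With more than two cameras, a least-squares solution over all rays is the usual generalization and is more robust to a single bad detection.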

Naturally, this raises two more serious problems:

First, how to detect the different light balls (points) in the camera image accurately and reliably, and tell them apart;

Second, how to know the position and orientation of each camera in world space, so that the player's position and orientation can be calculated accurately.

Solving the first problem alone has made countless developers rack their brains. Distinguishing markers by color is certainly feasible, but what if something of a similar color appears in the camera image? Or what if the scene itself is colorful? In such cases misidentification is hard to avoid. In response, a group of optical motion capture vendors stepped forward and chose infrared cameras as an alternative means of identification.

The veteran in this field is OptiTrack. Its professional cameras run at frame rates above 100 Hz and use a global shutter, which, together with the high frame rate, keeps fast-moving objects from appearing smeared or distorted. Infrared LEDs around the camera provide fill light, and highly reflective materials are used to make the markers that players wear. Because the infrared camera already filters out most visible light, the markers stand out clearly in the image. Unless someone interferes with another infrared light source, misidentification is almost impossible.

According to currently available information, both The Void theme park and Noitom's Project Alice use OptiTrack's spatial positioning scheme, which speaks for its reliability. However, the cost is correspondingly high. (As shown in the figure below, a single camera costs tens of thousands of renminbi, and covering a regular space requires at least four such cameras plus the software system.)


However, since non-luminous marker balls replace PS Move's glowing ball (with the side benefit that the markers need no power supply), how can the ID of each marker be determined so that multiple players can be told apart in the game? There are several methods. One is to vary the reflectivity so that markers appear with different brightness in the camera image. Another is to arrange each group of marker balls in a different layout so that the group forms a unique pattern, as shown below:

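The idea of telling players apart by the layout of their marker groups can be sketched as follows. This is a simplified illustration, assuming the 3D marker positions of one group have already been reconstructed and that each player's layout has distinct marker-to-marker distances; the template dictionary, the function names and the 1 cm tolerance are all hypothetical.

```python
import math
from itertools import combinations


def distance_signature(points):
    """Sorted list of all pairwise distances within a marker group."""
    return sorted(math.dist(p, q) for p, q in combinations(points, 2))


def identify_cluster(observed_points, templates, tolerance=0.01):
    """Return the ID of the template whose distances best match, or None.

    Pairwise distances do not change when the group moves or rotates,
    so they can be compared directly against each known layout.
    """
    observed = distance_signature(observed_points)
    if not observed:
        return None  # fewer than two markers: nothing to compare
    best_id, best_error = None, tolerance
    for player_id, template_points in templates.items():
        template = distance_signature(template_points)
        if len(template) != len(observed):
            continue
        error = max(abs(a - b) for a, b in zip(observed, template))
        if error <= best_error:
            best_id, best_error = player_id, error
    return best_id
```

A pure distance signature cannot tell a layout from its mirror image, and it needs every marker in the group to be visible, so a production system would need a more careful matching step.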

As for the second problem mentioned earlier, how to know the position and orientation of each camera in world space, the answer is pre-calibration. The builders of the experience hall install each camera at a fixed position in advance and then inspect the image from each one. The position and orientation of each camera are calculated from markers whose positions and orientations are known, and then saved. This process is undoubtedly cumbersome and tedious, especially when hundreds of cameras must be deployed. Once the setup is complete, keeping the cameras from being moved, or from shifting because of the venue's structure and vibration, is another maintenance problem that every developer has to face.

Still, optical positioning offers considerable accuracy and stability, can reach very low latency with suitable camera settings, and can in theory be extended to an arbitrarily large space, so it has become the first choice of many current VR experience hall builders. Identifying multiple players by markers remains quite limited, however: the markers cannot be combined into new patterns indefinitely, and if two markers come too close together (for example, two players fighting back to back), mismeasurement or failed recognition can easily occur. In addition, an overly complex venue makes it more likely that markers are blocked by obstacles and missed. As a result, many of the optically tracked experiences we have seen so far are games played in an empty, regular room.

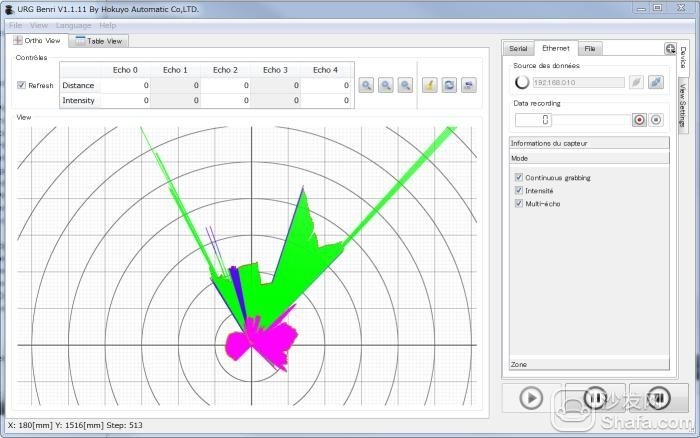

(c) Laser radar (lidar)

Lasers have very precise ranging capability, with accuracy down to the millimeter level. The most common products are two-dimensional lidars from manufacturers such as Japan's HOKUYO and Germany's SICK.

"Two-dimensional" means that the light emitted by such a lidar sweeps out a fan-shaped plane. The three-dimensional lidars used for surveying and mapping, or for 3D reconstruction in the construction industry, are essentially two-dimensional lidars rotated about one more axis, which extends the coverage to three-dimensional space.

A lidar consists of a single-beam, narrowband laser and a receiving system. The laser generates and emits a pulse of light, which hits an object, is reflected back, and is finally picked up by the receiver. The receiver accurately measures the time of flight of the pulse from emission to return, i.e. TOF (Time of Flight). Because the pulse travels at the speed of light, the receiver always receives the reflection of one pulse before the next pulse is emitted. Since the speed of light is known, the travel time can be converted into a distance. Because the speed of light itself serves as the ruler, lidar accuracy is generally quite high: for indoor applications, the error is at the millimeter level.
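The conversion from pulse travel time to distance is simple enough to write down directly. The sketch below also shows the timing resolution that a given range resolution implies; the function names are illustrative and the numbers in the note that follows are worked examples, not specifications of any particular lidar.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def pulse_distance(round_trip_seconds):
    """Distance to the target; the pulse covers the path out and back."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def timing_resolution_for(range_resolution_m):
    """Round-trip time difference that corresponds to a range difference."""
    return 2.0 * range_resolution_m / SPEED_OF_LIGHT
```

A target about 10 m away returns the pulse after roughly 67 nanoseconds, and resolving 1 mm means resolving a timing difference of under 7 picoseconds, which helps explain why the components are demanding.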

A two-dimensional lidar is in effect a one-dimensional, single-beam laser spinning on a rotating base, so that the beam sweeps out a fan-shaped surface. It can measure across a fan-shaped area centered on itself with a radius of tens of meters, so if someone moves within this area, the lidar can determine that person's position accurately and output it to a computer. Lidar can also serve as an obstacle avoidance sensor in robotics research.
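As a rough sketch of how one sweep of such a lidar becomes a position, the code below converts a list of range readings into 2D points and averages them. It assumes an otherwise empty room, so that any return closer than a chosen cutoff belongs to the person; the scan layout (start angle plus a fixed angular step) and the function names are illustrative assumptions rather than any vendor's data format.

```python
import math


def scan_to_points(ranges_m, start_angle_rad, angle_step_rad, max_range_m):
    """Convert one sweep of range readings to (x, y) points.

    Readings at or beyond max_range_m (walls, or no return) are dropped.
    The lidar sits at the origin; angles are measured from the x axis.
    """
    points = []
    for index, distance in enumerate(ranges_m):
        if 0.0 < distance < max_range_m:
            theta = start_angle_rad + index * angle_step_rad
            points.append((distance * math.cos(theta), distance * math.sin(theta)))
    return points


def centroid(points):
    """Average of the points, used here as the person's estimated position."""
    count = len(points)
    return (sum(x for x, _ in points) / count, sum(y for _, y in points) / count)
```

The centroid is taken over the visible surface only, so it sits slightly in front of the person's true center; a real system would also cluster the points to separate several people.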

The rotating bases come in many speed grades, so lidars differ in scanning frequency. A scanning frequency of a few tens of Hz is normally enough for position detection in VR.

However, the working principle of lidar places high demands on its components, and the devices usually operate in harsh conditions: they must be waterproof and dustproof, and must run for tens of thousands of hours without failure. Production costs are therefore high. The higher the scanning frequency and the longer the detection range (that is, the greater the transmit power), the more expensive the lidar. A two-dimensional lidar costs from nearly ten thousand to several tens of thousands of yuan, and the airborne three-dimensional lidars used for surveying and mapping (hundreds of thousands to millions of yuan) are beyond what ordinary users can consider.

Apart from price, lidar has another major problem as a positioning device. Because the laser sweeps a fan-shaped plane, when many moving objects crowd together they occlude one another, and objects in the "shadow" behind a nearer object go undetected. In addition, lidar can only measure distance and cannot identify which object is which. So even leaving price aside, it is better suited to single-player use; group play requires other solutions.

(d) HTC Vive: Light House

Recently, the launch of the HTC Vive has been big news for the entire industry, and its positioning method, which differs from the optical approach, is also much discussed. The HTC Vive has three main parts: a headset and controllers, both covered with light sensors, and Light House, which is used for positioning. The player installs Light House in advance in two corners of an empty room. The two Light House units are two fixed laser-emitting base stations, as shown in the following figure.

With its cover removed, a Light House unit looks like this:

The dense array of LEDs emits the synchronization flash. The two cylinders are rotating line lasers: one sweeps along the X axis and the other along the Y axis. The two lasers are fixed 180 degrees out of phase, so when A is lit B is dark, and when B is lit A is dark.

Both the controller and the headset carry light sensors mounted at fixed positions:

The workflow of this system has three steps:

1. Synchronization: the LED panel flashes once, illuminating the sensors on the controller and the headset together as a synchronization signal.

2. X-axis scanning: the horizontal line laser sweeps across the light sensors on the controller and the headset.

3. Y-axis scanning: the vertical line laser sweeps across the light sensors on the controller and the headset.

Both the headset and the controllers carry many light sensors. When the base station's LEDs flash, all devices synchronize their clocks, and then the lasers begin to sweep. Each light sensor can then measure the time at which the X-axis laser and the Y-axis laser reach it.

In other words, the laser sweeps across the sensors one after another, so the sensors register the signal at different times, and from these times the X-axis and Y-axis angles of each sensor relative to the base station can be determined. The positions of the sensors on the headset and the controllers are calibrated in advance and fixed. From the differences between the individual sensors' measurements, the position and trajectory of the headset and controllers can then be calculated.
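The timing-to-angle step can be sketched as follows. This is a minimal illustration that assumes each rotor turns at a constant rate and that the sweep angle is counted from the moment of the sync flash; the 60 revolutions per second figure, the function names, and the convention that angles passed to `ray_direction` are measured from the base station's forward axis are assumptions made for the example.

```python
import math

ROTATION_PERIOD_S = 1.0 / 60.0  # assumed: one rotor revolution every 1/60 s


def sweep_angle(sync_time_s, hit_time_s, period_s=ROTATION_PERIOD_S):
    """Angle the laser plane has turned between the sync flash and the hit."""
    return 2.0 * math.pi * (hit_time_s - sync_time_s) / period_s


def ray_direction(azimuth_rad, elevation_rad):
    """Unit vector from the base station toward a sensor (z points forward).

    The X sweep constrains x / z = tan(azimuth) and the Y sweep constrains
    y / z = tan(elevation); the sensor lies where the two planes cross.
    """
    x = math.tan(azimuth_rad)
    y = math.tan(elevation_rad)
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)
```

Each sensor yields only a direction, not a distance. Recovering the full position and orientation of the headset or controller means combining the directions of several sensors with their known, pre-calibrated layout on the device.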

The biggest advantage of Light House is that it requires very little computation. A CV-based system must first capture an image and then have software extract the features in it, and the more detailed the image, the more image processing power is needed. Light House uses only photosensors and needs no imaging, so it involves no heavy computation or image processing and avoids the associated performance cost and instability.

Moreover, a heavy computational load usually means higher latency and cannot be handled by an embedded processor. Because Light House needs so little computation, the embedded system can do the processing itself and send the position data directly to the PC, saving much of the time otherwise spent on transmission and processing.

However, although Light House is by far the best VR interactive positioning device, the laser power is limited for eye safety, so the distance it can cover is relatively small, roughly a 5 m × 5 m square, and there must not be too many obstructions or the signal will not be received. Installing and debugging the equipment is also relatively tedious and may be difficult for the average user.


Seven weapons for VR spatial positioning

The construction of VR experience halls still lacks its most important link: a cheap, flexible and accurate positioning solution. This article explains the advantages and disadvantages of several existing solutions. The article is split into two parts.